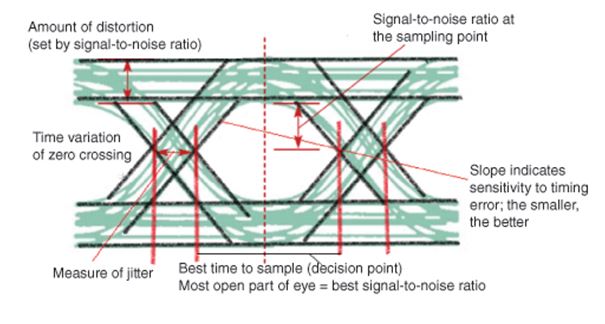

Though a detailed explanation of jitter can be mathematically complex, simply put, jitter is the deviation of a signal from its ideal periodic behavior in terms of frequency, amplitude, or phase. Jitter in a signal has many contributors, from the source itself to outside interferers. Like other time-varying signals, jitter can be described by its RMS value, its peak-to-peak value, or its spectral density, and it is occasionally characterized by its variance as well.
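To make the RMS, peak-to-peak, and variance descriptions concrete, here is a minimal sketch. It assumes jitter is measured as time interval error (TIE), meaning each measured edge time minus its ideal edge time, with the ideal clock aligned to the first captured edge. The function name `jitter_stats` and the sample edge timestamps are hypothetical and chosen only for illustration.

```python
import math

def jitter_stats(edge_times_s, ideal_period_s):
    """Summarize jitter from measured edge timestamps (in seconds).

    Assumption: jitter is measured as time interval error (TIE), i.e. each
    measured edge minus the ideal edge time, with the ideal clock aligned
    to the first captured edge.
    """
    t0 = edge_times_s[0]
    tie = [t - (t0 + n * ideal_period_s) for n, t in enumerate(edge_times_s)]
    mean = sum(tie) / len(tie)
    # Population variance of the TIE samples
    variance = sum((x - mean) ** 2 for x in tie) / len(tie)
    return {
        "rms": math.sqrt(variance),           # RMS about the mean (standard deviation)
        "peak_to_peak": max(tie) - min(tie),  # spread between earliest and latest edge error
        "variance": variance,
    }

# Hypothetical capture of a 1 GHz clock (ideal period 1 ns)
edges = [0.0, 1.01e-9, 1.99e-9, 3.0e-9]
stats = jitter_stats(edges, 1e-9)
print(stats)
```

Note that RMS jitter taken about the mean is simply the square root of the variance, which is why the two descriptions carry the same information.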

The unit interval (UI), common in telecommunications, expresses the magnitude of the jitter deviation relative to the ideal period of one bit. Conveniently, this measure scales with the signal frequency, which makes it easier to compare jitter between signals of different frequencies. Because jitter is a time-varying signal with both a magnitude and a frequency component, some representations instead express it as a phase deviation in degrees or radians, where one UI corresponds to 360° (2π radians).
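The UI and phase descriptions are related by simple arithmetic: divide the jitter in seconds by the bit period to get UI, then scale by 360° or 2π radians per UI. The sketch below assumes a NRZ-style stream where one UI equals one bit period (1 / bit rate); the helper names and the 20 ps / 10 Gb/s figures are hypothetical.

```python
import math

def jitter_to_ui(jitter_s, bit_rate_bps):
    """Express a timing deviation in unit intervals (1 UI = one bit period)."""
    unit_interval_s = 1.0 / bit_rate_bps
    return jitter_s / unit_interval_s

def ui_to_degrees(ui):
    return ui * 360.0          # one full UI corresponds to 360 degrees of phase

def ui_to_radians(ui):
    return ui * 2.0 * math.pi  # ...or 2*pi radians

# Hypothetical example: 20 ps of jitter on a 10 Gb/s stream (UI = 100 ps)
ui = jitter_to_ui(20e-12, 10e9)
print(ui, ui_to_degrees(ui), ui_to_radians(ui))
```

Here 20 ps is one fifth of the 100 ps bit period, so it works out to 0.2 UI, or 72° of phase.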

Potential jitter sources include thermal noise, EMI, RFI, and noise-generation effects in semiconductor materials. Ultimately, these effects shift the positions of edges in digital data streams demodulated from RF signals and can introduce data errors if the jitter exceeds the system's tolerance. Signals with higher data rates generally have less tolerance to these timing errors, because a given amount of jitter in seconds consumes a larger fraction of a shorter bit period.
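The effect of data rate on tolerance can be illustrated by holding the absolute jitter fixed and checking it against a timing budget at several rates. This is a simplified sketch: the 30 ps peak-to-peak jitter and the 0.5 UI tolerance are hypothetical numbers, not values from any particular standard, and real links budget several jitter components separately.

```python
def jitter_margin_ui(jitter_pp_s, bit_rate_bps, tolerance_ui):
    """Remaining timing margin in UI; a negative result means the jitter
    exceeds the (assumed) tolerance budget."""
    jitter_ui = jitter_pp_s * bit_rate_bps  # seconds divided by the bit period (1 / bit rate)
    return tolerance_ui - jitter_ui

# Same 30 ps peak-to-peak jitter checked against a hypothetical 0.5 UI budget
for rate_bps in (1e9, 10e9, 25e9):
    margin = jitter_margin_ui(30e-12, rate_bps, tolerance_ui=0.5)
    status = "OK" if margin >= 0 else "exceeds tolerance"
    print(f"{rate_bps / 1e9:>4.0f} Gb/s: margin {margin:+.2f} UI ({status})")
```

The same 30 ps that is negligible at 1 Gb/s (0.03 UI) grows to 0.75 UI at 25 Gb/s, which is why faster links need tighter absolute jitter specifications.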

Pasternack Blog